之前介紹的影像處理主要是為了撰寫本次介紹的圖像分割,影像分割現今運用非常廣泛,雖然人工智慧當中有Faster R-CNN處理方法,但目前誰也無法保證哪個才是最佳演算法,因此兩者都必須學習。這次主要參考OpenCV源碼[3]。

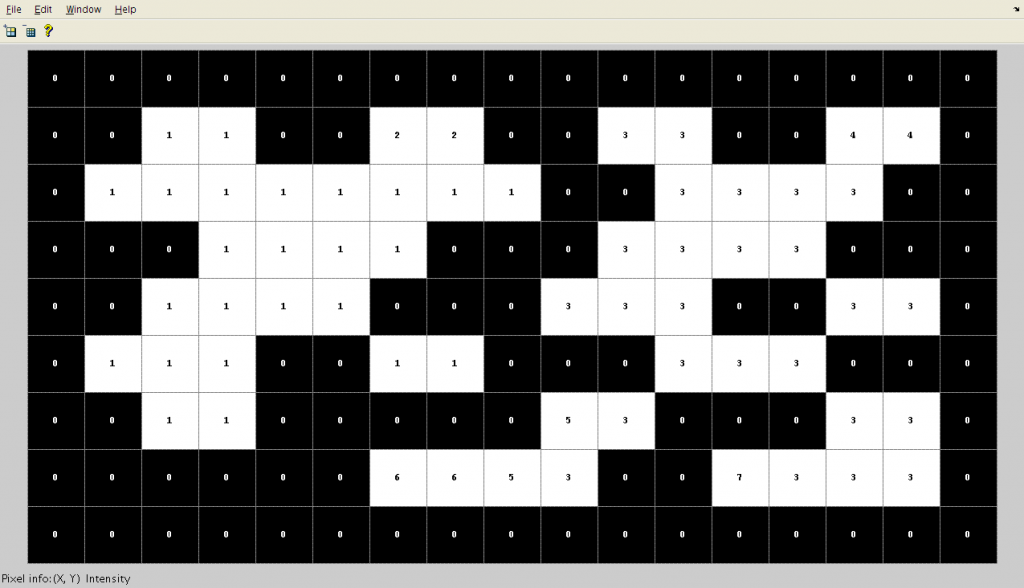

原先連通法主要將圖像二值化,再給予同區塊的白色像素都設定同一個標記,主要分為四方連通和八方連通。這裡使用八方連通法,而走訪由左至右由上而下,只需要走訪中心點的四個相連點(這裡使用左 + 上排)找出最小標記,若未標記則給予中心點新的標記,走訪第一次如下圖,可以看到白色部分都已被標記。

來源[4]

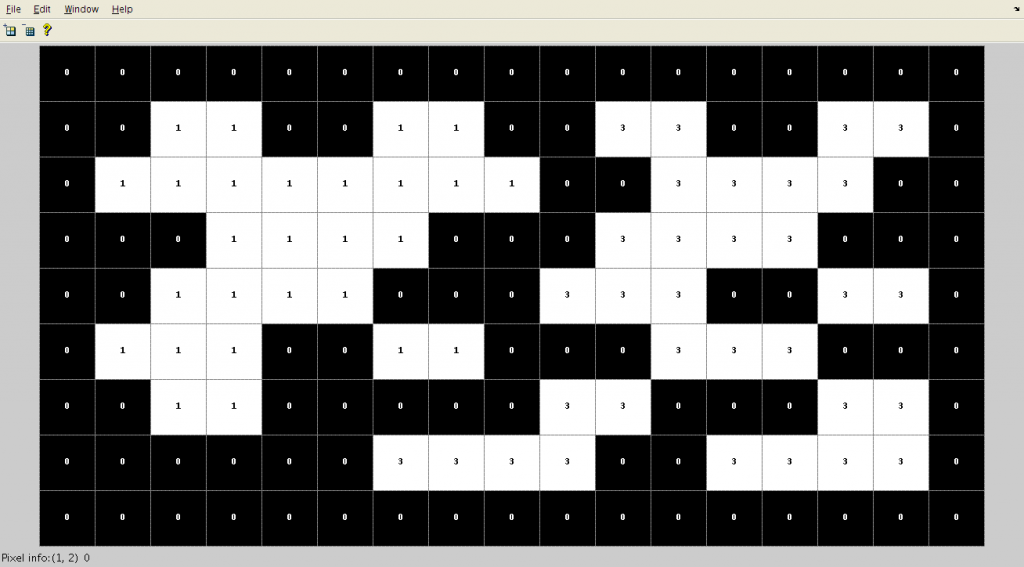

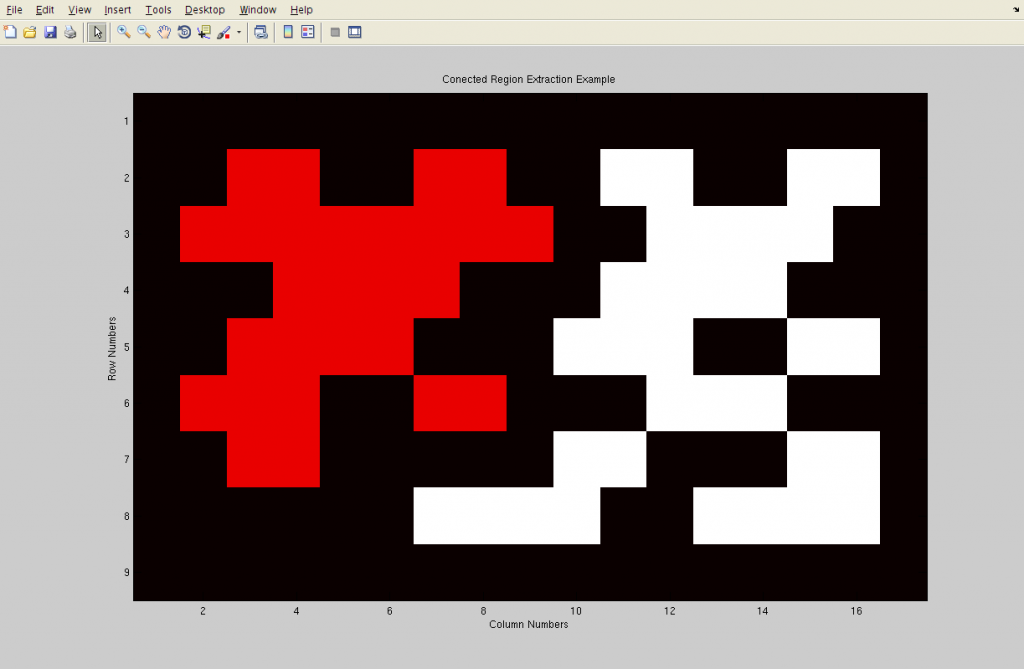

接著再從頭走訪一次去作合併,因為第一次走訪時依序慢慢給予標記,也就是說第一次走訪下方和右方未標記的也可能是白色,所以在走訪一次比大小去作合併,就變為八方連通了,結果如下圖一,上色為圖二。

圖一,來源[4]。

圖二,來源[4]。

這裡所使用的方法則是先計算所有像素與四邊的像素差,再由小排到大後依序去標記連通。而這裡更新標記不同的是,它會多一個變數rank來記錄優先順序(通常先處理像素的rank會較大),合併時比較rank,給予較大rank的標記。

GraphNode:結構是紀錄一個像素的rank優先程度、連結的index和目前大小。Find:更新連結index和取得連結的index。Merge:合併兩個像素(區域)。NumSets:區域數量。GetSize:取得區域大小。#pragma once

#ifndef GRAPH_TREE_H

#define GRAPH_TREE_H

#include "general.h"

typedef struct {

UINT32 rank;

UINT32 next;

UINT32 size;

} GraphNode;

class GraphTree

{

public:

GraphTree(C_UINT32 nodeSize);

~GraphTree();

UINT32 Find(C_UINT32 index);

void Merge(C_UINT32 index1, C_UINT32 index2);

UINT32 NumSets() const { return _nodeSize; };

UINT32 GetSize(C_UINT32 index) const { return _graphNodes[index].size; };

private:

UINT32 _nodeSize;

GraphNode* _graphNodes;

};

#endif

GraphTree.cpp

#include "GraphTree.h"

GraphTree::GraphTree(C_UINT32 nodeSize)

{

_nodeSize = nodeSize;

_graphNodes = new GraphNode[nodeSize];

for (UINT32 index = 0; index < nodeSize; index++)

{

GraphNode* graphNode = &_graphNodes[index];

graphNode->next = index;

graphNode->rank = 0;

graphNode->size = 1;

}

}

UINT32 GraphTree::Find(C_UINT32 index)

{

UINT32 next = index;

while (next != _graphNodes[next].next)

{

next = _graphNodes[next].next;

}

_graphNodes[index].next = next;

return next;

}

void GraphTree::Merge(C_UINT32 index1, C_UINT32 index2)

{

GraphNode& graphNode1 = _graphNodes[index1];

GraphNode& graphNode2 = _graphNodes[index2];

if (graphNode1.rank > graphNode2.rank)

{

graphNode2.next = index1;

graphNode1.size += graphNode2.size;

}

else

{

graphNode1.next = index2;

graphNode2.size += graphNode1.size;

if (graphNode1.rank == graphNode2.rank)

{

graphNode2.rank++;

}

}

_nodeSize--;

}

GraphTree::~GraphTree()

{

delete[] _graphNodes;

}

在論文當中有對於闊值的演變詳細解說,這裡使用[2]提到使用公式為面積(閥值) / 周長 + 像素差,這裡可以想像當下點與其他排序點的差距越來越遠來理解,因為隨著合併的像素越多周長就越大相對的合併條件也會越嚴謹(因排序完所以前面影響越大,後面影響越小)。

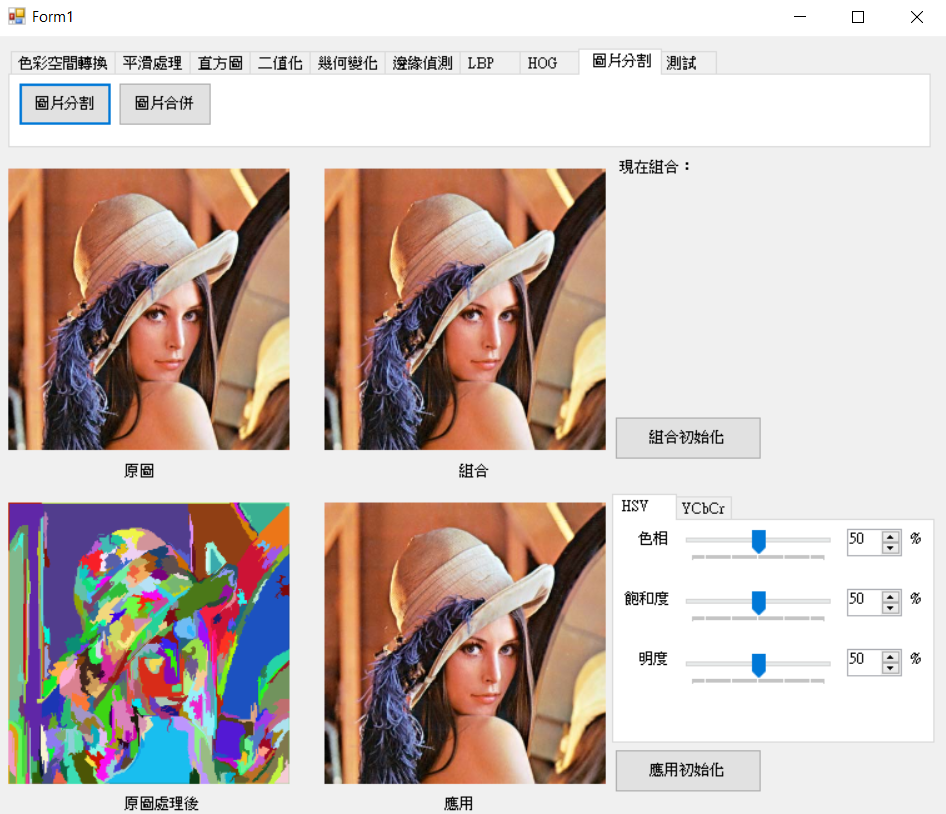

SegmentImage:取得處理完的連通資料。SegmentGraph:排序並將符合條件的區域合併(合併條件當下資料小於中心(連通標籤)和鄰邊(連通標籤)闊值)。Visualization:可視化連通資料。void Segment::SegmentImage(C_UCHAE* src, UCHAE* pur

, C_UINT32 width, C_UINT32 height

, C_FLOAT sigma, C_FLOAT threshold, C_UINT32 minSize

, GraphTree* graphTree)

{

assert(src != nullptr && pur != nullptr);

assert(width > 0 && height > 0);

assert(graphTree != nullptr);

assert(graphTree->NumSets() == width * height);

C_UINT32 size = static_cast<UINT32>(ceil(4 * sigma)) + 1;

MNDT::BlurGauss24bit(src, pur

, width, height

, size, sigma);

Image purImage(pur, width, height, MNDT::ImageType::BGR_24BIT);

Edge* edges = new Edge[width * height * 4];

UINT32 edgeSize = 0;

// 1. get neighbors

for (UINT32 row = 0; row < height; row++)

{

for (UINT32 col = 0; col < width; col++)

{

UINT32 nowIndex = row * width + col;

Pixel nowPix = purImage.GetPixel(row, col);

Edge* edge = nullptr;

// top

if (row > 0)

{

edge = &edges[edgeSize];

edge->centerIndex = nowIndex;

edge->linkIndex = (row - 1) * width + col;

edge->weights = Diff(nowPix, purImage.GetPixel(row - 1, col));

edgeSize++;

}

// top left

if (row > 0 && col > 0)

{

edge = &edges[edgeSize];

edge->centerIndex = nowIndex;

edge->linkIndex = (row - 1) * width + col - 1;

edge->weights = Diff(nowPix, purImage.GetPixel(row - 1, col - 1));

++edgeSize;

}

// top right

if (row > 0 && col < width - 1)

{

edge = &edges[edgeSize];

edge->centerIndex = nowIndex;

edge->linkIndex = (row - 1) * width + col + 1;

edge->weights = Diff(nowPix, purImage.GetPixel(row - 1, col + 1));

++edgeSize;

}

// left

if (col > 0)

{

edge = &edges[edgeSize];

edge->centerIndex = nowIndex;

edge->linkIndex = row * width + col - 1;

edge->weights = Diff(nowPix, purImage.GetPixel(row, col - 1));

++edgeSize;

}

}

}

// step 2.3

// 連通法

SegmentGraph(graphTree

, edges, edgeSize

, threshold);

// 4. merge region if the region is smaller than minSize params

for (UINT32 index = 0; index < edgeSize; index++)

{

const Edge& edge = edges[index];

C_UINT32 centerIndex = graphTree->Find(edge.centerIndex);

C_UINT32 linkIndex = graphTree->Find(edge.linkIndex);

if (centerIndex != linkIndex

&& (graphTree->GetSize(centerIndex) < minSize || graphTree->GetSize(linkIndex) < minSize))

{

graphTree->Merge(centerIndex, linkIndex);

}

}

delete[] edges;

edges = nullptr;

}

void Segment::SegmentGraph(GraphTree* graphTree

, Edge* edges, C_UINT32 edgeSize

, C_FLOAT threshold)

{

// 2. sort

std::sort(edges, edges + edgeSize

, [](const Edge& edge1, const Edge& edge2) { return edge1.weights < edge2.weights; });

double* thresholds = new double[graphTree->NumSets()];

for (UINT32 index = 0; index < graphTree->NumSets(); index++)

{

thresholds[index] = Threshold(threshold, 1);

}

// 3. merge region

for (UINT32 index = 0; index < edgeSize; index++)

{

const Edge& edge = edges[index];

UINT32 centerIndex = graphTree->Find(edge.centerIndex);

C_UINT32 linkIndex = graphTree->Find(edge.linkIndex);

if (centerIndex != linkIndex

&& edge.weights < thresholds[centerIndex]

&& edge.weights < thresholds[linkIndex])

{

graphTree->Merge(centerIndex, linkIndex);

centerIndex = graphTree->Find(centerIndex);

thresholds[centerIndex] = edge.weights + Threshold(threshold, static_cast<float>(graphTree->GetSize(centerIndex)));

}

}

delete[] thresholds;

thresholds = nullptr;

}

void Segment::Visualization(Image& image

, GraphTree* graphTree)

{

C_UINT32 size = image.Height() * image.Width();

Pixel *pixs = new Pixel[size];

for (UINT32 index = 0; index < size; index++)

{

pixs[index] = GetColor();

}

for (UINT32 row = 0; row < image.Height(); row++)

{

for (UINT32 col = 0; col < image.Width(); col++)

{

UINT32 index = graphTree->Find(row * image.Width() + col);

image.SetPixel(row, col, pixs[index]);

}

}

delete[] pixs;

pixs = nullptr;

}

在論文當中講的很詳細,而做起來也並不困難,對於我來說最不好理解的部分大概是未改進的閥值,主要是英文太爛,真的要好好加強英文未來才能更快速的讀論文,下次會介紹已此論文演變的Selective Search for Object Recognition論文。現在文章改為講解演算法部分,所以有些小地方可能就會略過,若有興趣可去Github看源碼,有問題或錯誤歡迎提問。

[1]P. Felzenszwalb, D. Huttenlocher,"Efficient Graph-Based Image Segmentation"

International Journal of Computer Vision, Vol. 59, No. 2, September 2004

[2]TTransposition(2014).

图像分割—基于图的图像分割(Graph-Based Image Segmentation) from:https://blog.csdn.net/ttransposition/article/details/38024557 (2018.11.29)

[3]TTransposition(2014). 图像分割—基于图的图像分割(OpenCV源码注解) form:https://blog.csdn.net/ttransposition/article/details/38024605 (2018.11.29)

[4]維基百科(2017).連通分量標記from:https://zh.wikipedia.org/wiki/%E8%BF%9E%E9%80%9A%E5%88%86%E9%87%8F%E6%A0%87%E8%AE%B0 (2018.11.29)